4Open is a relatively new (but short-lived) open access interdisciplinary journal (voluntary APCs) covering the four fields of mathematics, physics, chemistry, and biology-medicine. A special issue on Logical Entropy was sponsored and edited by Giovanni Manfredi, Research Director at CNRS Strasbourg. My paper is the introduction to the volume. Here is the abstract.

(Update July 11, 2024) The 4Open journal has decided to shut down, so it is not clear how long this paper will remain available on its open access website (link above).

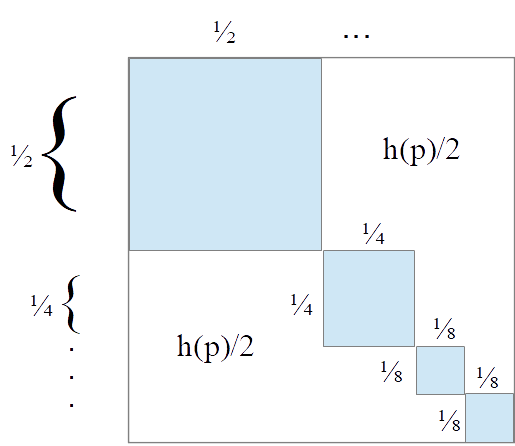

We live in the information age. Claude Shannon, as the father of the information age, gave us a theory of communications that quantified an “amount of information,” but, as he pointed out, “no concept of information itself was defined.” Logical entropy provides that definition. Logical entropy is the natural measure of the notion of information based on distinctions, differences, distinguishability, and diversity. It is the (normalized) quantitative measure of the distinctions of a partition on a set–just as the Boole-Laplace logical probability is the normalized quantitative measure of the elements of a subset of a set. And partitions and subsets are mathematically dual concepts–so the logic of partitions is dual in that sense to the usual Boolean logic of subsets, and hence the name “logical entropy.” The logical entropy of a partition has a simple interpretation as the probability that a distinction or dit (elements in different blocks) is obtained in two independent draws from the underlying set. The Shannon entropy is shown to also be based on this notion of information-as-distinctions; it is the average minimum number of binary partitions (bits) that need to be joined to make all the same distinctions of the given partition. Hence all the concepts of simple, joint, conditional, and mutual logical entropy can be transformed into the corresponding concepts of Shannon entropy by a uniform non-linear dit-bit transform. And finally logical entropy linearizes naturally to the corresponding quantum concept. The quantum logical entropy of an observable applied to a state is the probability that two different eigenvalues are obtained in two independent projective measurements of that observable on that state.
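To make the definitions in the abstract concrete, here is a minimal Python sketch (not taken from the paper). It assumes a finite set with equiprobable points, so the probability of a block B is |B|/|U|. The function names and the example partition are illustrative only. It computes the logical entropy two ways, from the formula h(π) = 1 − Σ p_B² and by directly counting distinctions (ordered pairs of elements in different blocks), and then computes the Shannon entropy by replacing each factor 1 − p_B in h(π) = Σ p_B(1 − p_B) with log₂(1/p_B), which is one way to read the dit-bit transform.

```python
from fractions import Fraction
from itertools import product
from math import log2


def block_probabilities(partition):
    """Return p_B = |B| / |U| for each block B (assumes equiprobable points)."""
    n = sum(len(block) for block in partition)
    return [Fraction(len(block), n) for block in partition]


def logical_entropy(partition):
    """h(pi) = 1 - sum_B p_B^2, equivalently sum_B p_B * (1 - p_B)."""
    return 1 - sum(p * p for p in block_probabilities(partition))


def dit_fraction(partition):
    """Fraction of ordered pairs (u, v) whose elements lie in different blocks."""
    block_of = {u: i for i, block in enumerate(partition) for u in block}
    universe = list(block_of)
    dits = sum(
        1 for u, v in product(universe, repeat=2) if block_of[u] != block_of[v]
    )
    return Fraction(dits, len(universe) ** 2)


def shannon_entropy(partition):
    """H(pi) = sum_B p_B * log2(1 / p_B): swap (1 - p_B) for log2(1 / p_B)."""
    return sum(
        float(p) * log2(1 / float(p)) for p in block_probabilities(partition)
    )


# Illustrative partition of U = {a, b, c, d} into three blocks.
partition = [{"a", "b"}, {"c"}, {"d"}]

print(logical_entropy(partition))  # 5/8
print(dit_fraction(partition))     # 5/8, the two-draw probability of a distinction
print(shannon_entropy(partition))  # 1.5 bits
```

In this example, 10 of the 16 ordered pairs are distinctions, so two independent draws from the set land in different blocks with probability 5/8, matching the formula. The Shannon value of 1.5 is the average number of binary questions needed to identify the block.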