Some basic analogies between subset logic and partition logic

A new logic of partitions has been developed that is dual to ordinary logic when the latter is interpreted as the logic of subsets rather than the logic of propositions. For a finite universe, the logic of subsets gave rise to finite probability theory by assigning to each subset its relative cardinality as a Laplacian probability. The analogous development for the dual logic of partitions gives rise to a notion of logical entropy that is related in a precise manner to Claude Shannon’s entropy. In this manner, the new logic of partitions provides a logical-conceptual foundation for information-theoretic entropy or information content. This post continues the earlier post which introduced some of the basic ideas and operations of partition logic.

A partition on a universe set U (two or more elements to avoid degeneracy) is a set of non-empty subsets (“blocks”) $\pi = \{B\}$ that are disjoint and jointly exhaust U. A partition may equivalently be viewed as an equivalence relation where the equivalence classes are the blocks. A partition $\sigma = \{C\}$ is refined by a partition $\pi = \{B\}$ if for every block $B \in \pi$, there is a block $C \in \sigma$ such that $B \subseteq C$. The partitions on U are partially ordered by refinement, with the minimum partition or bottom being the indiscrete partition $\mathbf{0} = \{U\}$, nicknamed the “blob,” consisting of U as a single block, and the maximum partition or top being the discrete partition $\mathbf{1} = \{\{u\}\}_{u \in U}$, where each block is a singleton. Join and meet operations are easily defined for this partial order so that the partitions on U form a (non-distributive) lattice (NB: in much of the older literature, the “lattice of partitions” is written “upside down” as the opposite lattice). Then the lattice operations of join and meet can be enriched by other partition operations such as negation, implication, and the (Sheffer) stroke or nand to form a partition algebra.

In the duality between subsets and partitions, outlined in an earlier post, the dual of an “element of a subset” is a “distinction of a partition,” where an ordered pair $(u, u') \in U \times U$ is a distinction or dit of a partition $\pi$ if u and u’ are in distinct blocks of $\pi$. In the algebra of all partitions on U, the bottom partition $\mathbf{0}$ has no dits and the top $\mathbf{1}$ has all dits [i.e., all pairs $(u, u')$ where $u \neq u'$], just as, in the analogous powerset Boolean algebra on U, the bottom $\emptyset$ has no elements and the top U has all the elements. Let $\operatorname{dit}(\pi) \subseteq U \times U$ be the set of distinctions of $\pi$. The partial order in the Boolean algebra of subsets is just inclusion of elements, and the refinement ordering of partitions is just inclusion of distinctions, i.e., $\sigma \preceq \pi$ ($\pi$ refines $\sigma$) iff $\operatorname{dit}(\sigma) \subseteq \operatorname{dit}(\pi)$.
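To make the dit-set picture concrete, here is a minimal Python sketch, not taken from the original post, that represents a partition as a list of Python sets, computes its dit set, and checks on a small example that refinement coincides with inclusion of dit sets. The helper names `dits` and `refines` are hypothetical, introduced only for illustration.

```python
from itertools import product

def dits(partition):
    """Return the set of ordered pairs (u, v) whose elements lie in different blocks."""
    block_of = {u: i for i, block in enumerate(partition) for u in block}
    return {(u, v) for u, v in product(block_of, repeat=2)
            if block_of[u] != block_of[v]}

def refines(pi, sigma):
    """True if every block of pi lies inside some block of sigma (pi refines sigma)."""
    return all(any(set(b) <= set(c) for c in sigma) for b in pi)

U = {1, 2, 3, 4}
blob = [U]                       # indiscrete partition 0: a single block, no dits
discrete = [{u} for u in U]      # discrete partition 1: singletons, all dits
sigma = [{1, 2}, {3, 4}]
pi = [{1}, {2}, {3, 4}]

assert dits(blob) == set()
assert dits(discrete) == {(u, v) for u in U for v in U if u != v}

# For each pair (a, b): b refines a exactly when dit(a) is a subset of dit(b).
for a, b in [(sigma, pi), (pi, sigma), (blob, sigma), (sigma, discrete)]:
    assert refines(b, a) == (dits(a) <= dits(b))
```

Representing a block as a set and a partition as a list of blocks keeps the code close to the set-theoretic definitions; an equivalence-relation representation would work equally well.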

| Table of analogies | Subset concept | Partition concept |
| --- | --- | --- |
| “Elements” | Elements | Distinctions (dits) |
| All “elements” | Universe U | Discrete partition $\mathbf{1}$ (all dits) |
| No “elements” | Null set $\emptyset$ | Indiscrete partition $\mathbf{0}$ (no dits) |
| Object or “event” | Subset $S \subseteq U$ | Partition $\pi$ on U |
| “Event” occurs | Element $u \in S$ | Dit $(u, u') \in \operatorname{dit}(\pi)$ |
| Partial order | Inclusion of elements | Inclusion of dits |
| Lattice of “events” | Lattice of all subsets of U | Lattice of all partitions on U |

Mimicking the development from subset logic to probability theory

With these analogies in hand, we then mimic the development of finite probability theory from subset logic (which goes back to Boole) using the corresponding concepts from partition logic.

For a finite U, the finite (Laplacian) probability of a subset (“event”) S is the ratio: $\Pr(S) = \frac{|S|}{|U|}$. Analogously, the finite logical entropy (or logical information content) $h(\pi)$ of a partition $\pi$ is the relative size of its dit set compared to the whole “closure space” $U \times U$: $h(\pi) = \frac{|\operatorname{dit}(\pi)|}{|U \times U|}$.
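Both normalized counts can be computed directly on a small universe. This is a minimal Python sketch under the assumption that U is a finite set of hashable elements and a partition is given as a list of sets; `laplace_prob` and `logical_entropy` are hypothetical names, and exact fractions are used so the results match the ratios above exactly.

```python
from fractions import Fraction
from itertools import product

def laplace_prob(S, U):
    """Laplacian probability Pr(S) = |S| / |U| for a subset S of a finite U."""
    return Fraction(len(S), len(U))

def logical_entropy(partition, U):
    """Logical entropy h(pi) = |dit(pi)| / |U x U|, counting dits directly."""
    block_of = {u: i for i, block in enumerate(partition) for u in block}
    n_dits = sum(1 for u, v in product(U, repeat=2) if block_of[u] != block_of[v])
    return Fraction(n_dits, len(U) ** 2)

U = set(range(6))
print(laplace_prob({0, 1}, U))                       # 1/3
print(logical_entropy([{0, 1, 2}, {3, 4}, {5}], U))  # 11/18
```

Here the partition with blocks of sizes 3, 2, and 1 has $36 - (9 + 4 + 1) = 22$ dits out of 36 ordered pairs, hence $22/36 = 11/18$.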

If U is an urn with each “ball” in the urn being equiprobable, then $\Pr(S)$ is the probability of an element randomly drawn from the urn being in S, and $h(\pi)$ is the probability that a pair of elements randomly drawn from the urn (with replacement) is a distinction of $\pi$.
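The urn interpretation can be checked empirically. The following Python sketch (a Monte Carlo illustration, not a method from the post) draws two elements with replacement from equiprobable “balls” and records how often they land in different blocks; the function name `estimate_h`, the number of trials, and the seed are all illustrative assumptions.

```python
import random

def estimate_h(partition, trials=100_000, seed=0):
    """Estimate h(pi) as the frequency with which two draws, made with
    replacement from an urn of equiprobable elements, fall in different blocks."""
    rng = random.Random(seed)
    block_of = {u: i for i, block in enumerate(partition) for u in block}
    urn = list(block_of)
    hits = 0
    for _ in range(trials):
        u, v = rng.choice(urn), rng.choice(urn)   # two draws with replacement
        hits += block_of[u] != block_of[v]        # count the pair if it is a dit
    return hits / trials

pi = [{0, 1, 2}, {3, 4}, {5}]
print(estimate_h(pi))   # should be close to the exact value 11/18 (about 0.611)
```

Because draws are with replacement, pairs $(u, u)$ can occur; they are never dits, which is exactly why the denominator of $h(\pi)$ is all of $U \times U$.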

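Let $\pi = \{B_i\}_i$ with $p_i = \frac{|B_i|}{|U|}$ being the probability of drawing an element of $B_i$. The indistinctions (non-distinctions) of $\pi$ are the ordered pairs lying within a single block, including the diagonal pairs $(u, u)$, so their number is $\sum_i |B_i|^2$, the number of distinctions is $|U|^2 - \sum_i |B_i|^2$, and thus the logical entropy of $\pi$ is: $h(\pi) = \frac{|U|^2 - \sum_i |B_i|^2}{|U|^2} = 1 - \sum_i p_i^2 = \sum_i p_i (1 - p_i)$ since $\sum_i p_i = 1$.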
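As an arithmetic check of this derivation, the Python sketch below computes $h(\pi)$ from block sizes alone in three ways (counting indits, $1 - \sum_i p_i^2$, and $\sum_i p_i(1 - p_i)$) and confirms they agree exactly. The function names and the block sizes `[3, 2, 1]` are hypothetical examples.

```python
from fractions import Fraction

def logical_entropy_by_counting(block_sizes):
    """(|U|^2 - sum |B_i|^2) / |U|^2: all ordered pairs minus the indits."""
    n = sum(block_sizes)
    indits = sum(b * b for b in block_sizes)
    return Fraction(n * n - indits, n * n)

def logical_entropy_from_blocks(block_sizes):
    """1 - sum p_i^2, with p_i = |B_i| / |U|."""
    n = sum(block_sizes)
    return 1 - sum(Fraction(b, n) ** 2 for b in block_sizes)

sizes = [3, 2, 1]
p = [Fraction(b, sum(sizes)) for b in sizes]
assert logical_entropy_by_counting(sizes) == logical_entropy_from_blocks(sizes)
assert logical_entropy_from_blocks(sizes) == sum(q * (1 - q) for q in p)
print(logical_entropy_from_blocks(sizes))   # 11/18
```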

The table of analogies can be continued.

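| Table of analogies | Subset concept | Partition concept |
| --- | --- | --- |
| Counting measure (U finite) | # elements in subset S | # dits in partition $\pi$ |
| Normalized count | $\Pr(S) = \lvert S \rvert / \lvert U \rvert$ | $h(\pi) = \lvert \operatorname{dit}(\pi) \rvert / \lvert U \times U \rvert$ |
| Probability interpretation | $\Pr(S)$ = probability that a randomly drawn element is in S | $h(\pi)$ = probability that a randomly drawn pair (with replacement) is a dit of $\pi$ |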

In Shannon’s information theory, the entropy of the partition $\pi$ (with the same probabilities $p_i$ assigned to the blocks $B_i$) is: $H(\pi) = \sum_i p_i \log\left(\frac{1}{p_i}\right)$, where the log is base 2.
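For comparison with the logical entropy computations, here is a minimal Python sketch of $H(\pi)$ computed from block sizes, assuming equiprobable elements so that $p_i = |B_i|/|U|$; the name `shannon_entropy` is a hypothetical helper, and the result is in bits.

```python
from math import log2

def shannon_entropy(block_sizes):
    """H(pi) = sum p_i * log2(1 / p_i), with p_i = |B_i| / |U|, in bits."""
    n = sum(block_sizes)
    return sum((b / n) * log2(n / b) for b in block_sizes)

print(shannon_entropy([4, 4]))    # 1.0: one binary question splits 8 elements in half
print(shannon_entropy([1] * 8))   # 3.0: the discrete partition on 8 elements
```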

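Each entropy can be seen as the probabilistic average of the “block entropies,” $h(\pi) = \sum_i p_i h(B_i)$ and $H(\pi) = \sum_i p_i H(B_i)$, where the logical block entropy is $h(B_i) = 1 - p_i$ and the Shannon block entropy is $H(B_i) = \log\left(\frac{1}{p_i}\right)$. To interpret the block entropies, consider a special case where $p_i = \frac{1}{2^n}$ and every block has the same size, so there are $2^n$ equal blocks like $B_i$ in the partition, e.g., the discrete partition on a set with $2^n$ elements. The logical entropy of that special equal-block partition is the logical block entropy: $h(\pi) = 2^n \cdot \frac{1}{2^n}\left(1 - \frac{1}{2^n}\right) = 1 - \frac{1}{2^n} = 1 - p_i = h(B_i)$.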

Instead of directly counting the distinctions, we could count the number of binary equal-blocked partitions it takes to distinguish all the blocks. As in the game of “twenty questions,” if there is a search for an unknown designated block, then each such binary question halves the number of remaining candidate blocks, so the minimum number of binary partitions it takes to distinguish all the $2^n$ blocks is: $\log_2(2^n) = n = \log\left(\frac{1}{p_i}\right) = H(B_i)$.
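The halving argument can be simulated. This Python sketch assumes the $2^n$ equal blocks are labeled $0, \ldots, 2^n - 1$ and that each yes/no question splits the remaining candidates into two equal halves; `questions_needed` is a hypothetical name. It confirms that every possible target block takes exactly $n$ questions.

```python
def questions_needed(n_blocks, target):
    """Count yes/no questions needed to locate `target` among blocks
    0..n_blocks-1 when each question halves the remaining candidates."""
    lo, hi = 0, n_blocks          # remaining candidates are lo, ..., hi - 1
    count = 0
    while hi - lo > 1:
        mid = (lo + hi) // 2
        count += 1                # ask: "is the target in the lower half?"
        if target < mid:
            hi = mid
        else:
            lo = mid
    return count

n = 3
print({questions_needed(2 ** n, t) for t in range(2 ** n)})   # {3}
```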

To precisely relate the block entropies, we solve each for $2^n$, which is then eliminated to obtain: $h(B_i) = 1 - \frac{1}{2^{H(B_i)}}$.
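Written out for the equal-block case $p_i = \frac{1}{2^n}$, the elimination step is:

$$
\begin{aligned}
h(B_i) = 1 - \frac{1}{2^n} \quad&\Longrightarrow\quad 2^n = \frac{1}{1 - h(B_i)},\\
H(B_i) = n \quad&\Longrightarrow\quad 2^n = 2^{H(B_i)},\\
\frac{1}{1 - h(B_i)} = 2^{H(B_i)} \quad&\Longleftrightarrow\quad h(B_i) = 1 - \frac{1}{2^{H(B_i)}}.
\end{aligned}
$$

Read in the other direction, the same identity gives $H(B_i) = \log\left(\frac{1}{1 - h(B_i)}\right)$.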

An Example

Consider an example of a set with

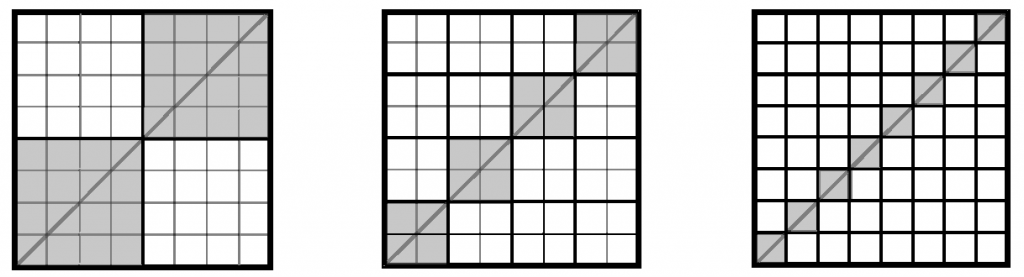

elements so that the Shannon entropy of 3 is the least number of binary partitions it takes to distinguish all the elements of the set. The effects of the three partitions can be illustrated in the following squares.

In terms of the twenty questions game, one could think of the first binary question as asking for the leftmost digit in the (three-digit) binary representation of each element's number. That gives the first partition $\pi_1$, which is represented by the leftmost square. There are 64 squares, one for each ordered pair in $U \times U$, and the indistinctions or indits of this equipartition of the 8-element set into two blocks of 4 are represented by the 32 shaded squares, while the distinctions or dits of the partition are given by the 32 unshaded squares.

The second binary question asks for the next digit in the binary representation of the number. This yields the second partition $\pi_2$, so “joining” the information in the first two partitions together gives the join $\pi_1 \vee \pi_2$, represented in the middle square. Then 16 more squares become unshaded, i.e., 16 additional pairs are distinguished by $\pi_1 \vee \pi_2$, for a total of 32 + 16 = 48 dits.

The third binary question asks for the rightmost digit, which yields the third partition, $\pi_3$, which is joined to the other two to create the discrete partition $\mathbf{1} = \pi_1 \vee \pi_2 \vee \pi_3$. It distinguishes all elements and is represented by the rightmost square, where only the squares on the diagonal remain shaded. This last join adds 8 more unshaded squares, i.e., distinguishes 8 more pairs, for a total of 48 + 8 = 56 dits distinguished by the 3 partitions.
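The dit counts 32, 48, and 56 can be reproduced in a few lines. This Python sketch assumes the eight elements are labeled 0 through 7 and represents each binary question as a hypothetical labeling function returning one bit of the label; it relies on the fact that the dit set of a join is the union of the dit sets, so a pair is distinguished by the join exactly when some question distinguishes it.

```python
from itertools import product

U = range(8)   # the eight elements, labeled 0..7 (an assumption for illustration)

def bit_partition(k):
    """Partition of U by binary digit k; k = 2 is the leftmost of three digits."""
    return lambda u: (u >> k) & 1

def dit_count(questions):
    """Number of ordered pairs distinguished by the join of the given partitions."""
    return sum(1 for u, v in product(U, repeat=2)
               if any(q(u) != q(v) for q in questions))

pi1, pi2, pi3 = bit_partition(2), bit_partition(1), bit_partition(0)
counts = [dit_count([pi1]), dit_count([pi1, pi2]), dit_count([pi1, pi2, pi3])]
print(counts)                      # [32, 48, 56]
print(counts[-1] / len(U) ** 2)    # 0.875
```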

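Shannon entropy counts the least number of these partitions it takes to distinguish all the elements, $H(\mathbf{1}) = \log_2 8 = 3$, while the logical entropy counts the number of distinctions which are thereby created, i.e., 56, which normalizes to: $h(\mathbf{1}) = \frac{56}{64} = \frac{7}{8}$. In this example, the two entropies stand in the relationship of the block entropies: $h(\mathbf{1}) = 1 - \frac{1}{2^{H(\mathbf{1})}} = 1 - \frac{1}{2^3} = \frac{7}{8}$.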

The interpretation of the Shannon block entropy is then extended by analogy to the general case where $\frac{1}{p_i}$ is not a power of 2, so that the Shannon entropy $H(\pi) = \sum_i p_i H(B_i)$ is interpreted as the average number of binary partitions needed to make all the distinctions between the blocks of $\pi$, whereas the logical entropy is still the relativized count $h(\pi) = \sum_i p_i h(B_i) = \frac{|\operatorname{dit}(\pi)|}{|U \times U|}$ of the distinctions created by the partition $\pi$.
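The general case can be illustrated with a partition whose block probabilities are not powers of 1/2. In this Python sketch the block sizes `[3, 2, 1]` on a six-element U are a hypothetical example; it computes both entropies as $p_i$-weighted averages of the block entropies and checks that the block-level relation $h(B_i) = 1 - 2^{-H(B_i)}$ still holds for each block.

```python
from fractions import Fraction
from math import log2, isclose

sizes = [3, 2, 1]                        # hypothetical blocks on a 6-element U
n = sum(sizes)
p = [Fraction(b, n) for b in sizes]      # p_i = 1/2, 1/3, 1/6

logical_block = [1 - q for q in p]           # h(B_i) = 1 - p_i
shannon_block = [log2(1 / q) for q in p]     # H(B_i) = log2(1 / p_i), in bits

h = sum(q * hb for q, hb in zip(p, logical_block))           # h(pi), exact
H = sum(float(q) * Hb for q, Hb in zip(p, shannon_block))    # H(pi), in bits

# Block by block, h(B_i) = 1 - 2**(-H(B_i)) even when 1/p_i is not a power of 2.
assert all(isclose(float(hb), 1 - 2 ** -Hb)
           for hb, Hb in zip(logical_block, shannon_block))
print(h, round(H, 3))   # 11/18 1.459
```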

Concluding remarks

Hence the two notions of entropy boil down to two different ways to count the distinctions of a partition. And thus the concept of a distinction from partition logic provides a logical-conceptual basis for the notion of entropy or information content in information theory. Many of the concepts and relations of Shannon’s information theory, e.g., mutual information, cross entropy, divergence, and the information inequality, can then be developed at the logical level in logical information theory. This and much else is spelled out in: Counting Distinctions: On the Conceptual Foundations of Shannon’s Information Theory. Synthese. 168 (1, May 2009): 119-149, which can be downloaded from this website.