Logical entropy is compared and contrasted with the usual notion of Shannon entropy. Then a semi-algorithmic procedure (from the mathematical folklore) is used to translate the notion of logical entropy at the set level to the corresponding notion of quantum logical entropy at the (Hilbert) vector space level.

4Open: Special Issue: Intro. to Logical Entropy

4Open is a relatively new open-access interdisciplinary journal (voluntary APCs) covering the four fields of mathematics, physics, chemistry, and biology-medicine. A special issue on Logical Entropy was sponsored and edited by Giovanni Manfredi, the Research Director of the CNRS Strasbourg. My paper is the introduction to the volume.

Talk: New Foundations for Quantum Information Theory

These are the slides for a talk given at the 6th International Conference on New Frontiers in Physics on Crete in August 2017.

Talk: New Foundations for Information Theory

These are the slides for a number of talks presenting logical information theory as new foundations for information theory.

Logical Information Theory: New Foundations for Information Theory

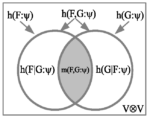

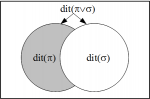

There is a new theory of information based on logic. The definition of Shannon entropy, as well as the notions of joint, conditional, and mutual entropy as defined by Shannon, can all be derived by a uniform transformation from the corresponding formulas of logical information theory.
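As a rough illustration of what such a uniform transformation can look like, the sketch below assumes the form usually given for simple entropy: logical entropy is the average of 1 − p_i, and Shannon entropy is obtained by replacing each 1 − p_i with log2(1/p_i) under the same average. The function names and the example distribution are hypothetical, chosen only for this sketch.

```python
import math

def average_over(p, term):
    """Probability-weighted average of term(p_i), skipping zero-probability outcomes."""
    return sum(pi * term(pi) for pi in p if pi > 0)

def logical_entropy(p):
    # Logical entropy: average of (1 - p_i), i.e. 1 - sum of p_i squared.
    return average_over(p, lambda pi: 1 - pi)

def shannon_entropy(p):
    # Same average, with each (1 - p_i) replaced by log2(1 / p_i).
    return average_over(p, lambda pi: math.log2(1 / pi))

# Hypothetical example distribution (an assumption for illustration).
p = [0.5, 0.25, 0.25]
print(logical_entropy(p))  # 0.625
print(shannon_entropy(p))  # 1.5
```

Both functions share the same averaging step and differ only in the per-outcome term, which is the sense in which the transformation is uniform in this sketch.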

Logical Entropy: Introduction to Classical and Quantum Logical Information Theory

Logical information theory is the quantitative version of the logic of partitions, just as logical probability theory is the quantitative version of the dual Boolean logic of subsets. The resulting notion of information is about distinctions, differences, and distinguishability, and is formalized as the distinctions of a partition, where a distinction is a pair of points distinguished by the partition. This paper is an introduction to the quantum version of logical information theory.
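For orientation, the classical and quantum formulas can be set side by side. The notation here is an assumption for this note rather than taken from the abstract: π is a partition with blocks B of probability p_B, and ρ is a density matrix.

```latex
h(\pi) = 1 - \sum_{B \in \pi} p_B^{2},
\qquad
h(\rho) = 1 - \operatorname{tr}\!\left[\rho^{2}\right]
```

Under this reading, the classical value is the probability that two independent draws fall in different blocks, and the quantum formula replaces the sum of squared block probabilities with the purity tr[ρ²].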

Information as distinctions

This paper is subtitled “New Foundations for Information Theory” since it is based on the logical notion of entropy from the logic of partitions. The basic logical idea is that of “distinctions.” Logical entropy is the normalized counting measure of the set of distinctions of a partition, and Shannon entropy is the number of binary partitions needed, on average, to make the same distinctions of the partition.
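The counting-measure description can be checked directly on a small example. The sketch below assumes equiprobable points and counts ordered pairs of points lying in different blocks, normalized by the total number of ordered pairs; it then compares the result with the closed form 1 − Σ p_B². The function names and the example partition are hypothetical.

```python
from fractions import Fraction
from itertools import product

def logical_entropy_by_counting(blocks):
    """Fraction of ordered pairs (u, v) that the partition distinguishes."""
    universe = [u for block in blocks for u in block]
    block_of = {u: i for i, block in enumerate(blocks) for u in block}
    # A distinction is an ordered pair whose points lie in different blocks.
    dits = sum(1 for u, v in product(universe, repeat=2)
               if block_of[u] != block_of[v])
    return Fraction(dits, len(universe) ** 2)

def logical_entropy_by_formula(blocks):
    """Closed form: 1 minus the sum of squared block probabilities."""
    n = sum(len(block) for block in blocks)
    return 1 - sum(Fraction(len(block), n) ** 2 for block in blocks)

# Hypothetical partition of a four-point universe (an assumption for illustration).
partition = [{"a", "b"}, {"c"}, {"d"}]
print(logical_entropy_by_counting(partition))  # 5/8
print(logical_entropy_by_formula(partition))   # 5/8
```

Here 10 of the 16 ordered pairs are distinctions, giving 5/8. For the same block probabilities (1/2, 1/4, 1/4) the Shannon entropy is 1.5 bits, the average number of binary questions needed to single out a block.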

From Partition Logic to Information Theory

A new logic of partitions has been developed that is dual to ordinary logic when the latter is interpreted as the logic of subsets rather than the logic of propositions. For a finite universe, the logic of subsets gave rise to finite probability theory by assigning to each subset its relative cardinality as a Laplacian probability. The analogous development for the dual logic of partitions gives rise to a notion of logical entropy that is related in a precise manner to Claude Shannon’s entropy.